Have you ever received a spam email and wondered how your email provider was able to identify it as spam? Well, the answer is likely machine learning! One common type of machine learning problem is called classification. The goal is to predict the correct class labels for a given set of observations. For example, we could train a classifier to identify whether an email is spam or not or to classify images of animals into different species. But before we can use a classifier in a real-world setting, we need to evaluate its performance to understand how well it can correctly classify observations. There are several tools and techniques we can use to do this, including the confusion matrix, error metrics, and the ROC curve. In this article, we’ll dive into these evaluation methods and see how they can help us understand the capabilities of our classifier.

This tutorial is divided into two parts: a conceptual introduction to evaluating classification performance and a hands-on example using Python and Scikit-Learn. In the first part, we will discuss some of the common error metrics that are used to evaluate the performance of a classifier. This includes the confusion matrix, error metrics, and the ROC curve. The second part of the tutorial is hands-on. We use Python and Scikit-Learn to build a breast cancer detection model classifying tissue samples as benign or malignant. We then apply various techniques to evaluate the model’s performance.

Why even bother Measuring Classification Performance?

Measuring classification performance in machine learning is important because it allows us to evaluate how well a model is able to predict the class of a given input accurately. This is important because the ultimate goal of many machine learning models is to make accurate predictions in real-world applications.

There are several reasons why it is important to measure classification performance. First, by measuring performance, we can determine whether a model is able to make accurate predictions. If a model cannot make accurate predictions, it may not be useful for the task it was designed for. Second, by measuring performance, we can compare the performance of different models and choose the best one for a given task. This can be especially important when working with large, complex datasets where multiple models may be applicable.

In order to measure classification performance, we need to use a performance metric appropriate for the task at hand. Next’s let’s understand what this means.

Techniques for Measuring Classification Performance

This first part of the tutorial presents essential techniques for measuring the performance of classification models, including confusion matrix, error metrics, and roc curves. But why are there so many different techniques? Isn’t it enough to calculate the rate between correct and false classifications?

The answer depends on the balance of the class labels and their importance. Let’s compare a simple two-class case vs. a more complex one. In the most simple case, the following applies:

- The class labels in the sample are perfectly balanced (for example, 50 positives and 50 negatives).

- Both class labels are equally important, so it does not matter if the model is better at predicting class one or two.

In this case, we can measure the model performance as the rate between correctly predicted labels and those that a model falsely predicted. It is as simple as that. However, most classification problems are more complex:

- The class labels are imbalanced, so the model encounters one class more often than the other.

- One class is more important than the other. For example, consider a binary classification problem that aims to identify the few positive cases from a sample with many negative ones. Especially in disease detection, it is crucial that the model correctly identifies the few positive cases, even if some of the observations classified as positive are negative.

Confusion matrix and error techniques help us objectively evaluate such models built for more complex problems.

The Confusion Matrix

A confusion matrix is an essential tool for evaluating a classification model. The confusion matrix is a table with four combinations of predicted and actual values for a problem where the output may include two classes (negative and positive). As a result, each prediction falls into one of the following four squares:

- True Positives (TP): the outcome from a prediction is “positive,” and the actual value is also “positive.”

- False Positives (FP): The model predicted a positive value, but this prediction is false.

- True Negatives (TN): Predicted was a negative value, which is correct.

- False Negatives (FN): The model predicted a negative value while the actual class was positive.

We can assign each classification to a cell in the matrix. The diagonal contains the correctly classified cases whose actual class matches the predicted class. All other cells outside the diagonal represent possible errors. Using the confusion matrix, you can see at a glance how well the model works and what errors it makes.

The confusion matrix is the basis for calculating various error metrics, which we will look at in more detail in the following section.

Metrics for Measuring Classification Errors

To objectively measure the performance of a classifier, we can count up the cases in the different squares and use this information to calculate essential error metrics, including accuracy, precision, recall, f-1 score, and specificity.

Precision

Precision is a metric for the rate of missed positive values. Mathematically, it is the sum of true positives divided by the sum of False Positives and True Positives.

In other words, it measures the ability of a classification model to identify the relevant data points without misclassifying too many irrelevant cases.

Accuracy

Accuracy tells us the rate of the positive values that were classified correctly. It is calculated as the sum of all correct classifications divided by the number of false positives.

The usefulness of Accuracy ends when the class labels are imbalanced so that one class is underrepresented. The Accuracy can be misleading as it can become nearly 100% even if the classification model has not identified any of the data points in the underrepresented class. If your data is imbalanced, you should combine accuracy with the Recall.

F1-Score

The F1-Score combines Precision and Recall into a single metric. It is calculated as the harmonic mean of Precision and Recall.

The F1-Score is a single overall metric based on precision and recall. We can use this metric to compare the performance of two classifiers with different recall and precision.

Recall (Sensitivity)

Recall, sometimes called “Sensitivity,” measures the percentage of correctly classified positives among the entire sum of actual positives. We calculate it as the number of True Positives divided by the False Negatives and True Positives.

The Recall is particularly helpful if we deal with an imbalanced dataset, for example, when the goal is to identify a few critical cases among a large sample.

Specificity

We calculate the number of negative samples. It is also called the True-Negative Rate and plays a vital role in the ROC Curve, which we will look at in more detail in the following section.

None of the five metrics is sufficient to measure the model performance. We, therefore, use different metrics in combination. Note the following rules:

- If the classes in the dataset are balanced, measure performance using Accuracy.

- If the dataset is imbalanced or one class is more important than the other, look at Recall and Precision.

- For classification problems where you want to compare different models with similar recall and precision, use the F1Score.

Decision Boundary

A classifier determines class labels by calculating the probabilities of samples falling into a particular category. Since the probabilities are continuous values between 0.0 and 1.0, we use a decision boundary to convert them to class labels. The default threshold for a binary classifier is 0.5. Samples with probabilities above 0.5 are assigned to the first class, and samples below 0.5 to the second class.

In practice, we often encounter classification problems, where the cost of an error varies between class labels. In such cases, we can alter the decision boundary to give one of the classes a higher priority. Consider the case of credit card fraud detection. In this case, it is critical for service providers to reliably detect the few fraud cases among the many legitimate credit card transactions. We can alter the decision threshold to increase the probability that the model detects fraud (high True Positive rate). The cost of detecting more fraud is a higher number of transactions that the model misclassifies as fraud. However, in this particular example, this is acceptable because the service provider can quickly resolve misunderstandings with the customer.

The ROC Curve

The ROC curve is another helpful tool to measure classification performance and is particularly useful for comparing different classification models’ performance. ROC stands for “Receiver Operating Characteristic.” The ROC curve is a graphical plot that illustrates the diagnostic ability of a binary classifier system as its discrimination threshold is varied. The curve emerges when we plot the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings.

The more the ROC curve tends to the upper left corner, the better the performance of the classification model. A perfect classifier would show a point in the upper left corner or coordinate (0,1), which is the ideal point for a diagnostic test. This is because a point at (0,1) indicates that the classifier has a 100% true positive rate and a 0% false positive rate. A curve near the diagonal indicates that the True Positive Rate and False Positive Rate are equal, which corresponds to the expected prediction result of a random classifier with no predictive power. If the ROC curve remains significantly below the diagonal, this indicates a classifier with inverse prediction power.

The ROC for classification models is not necessarily a curve and often runs as a jumpy line with several plateaus. Plateaus range where changes to the threshold do not change the classification results. Curves with plateaus can signify tiny sample sizes, but they may also have other reasons.

Measuring Classification Performance in Python (Two-Class)

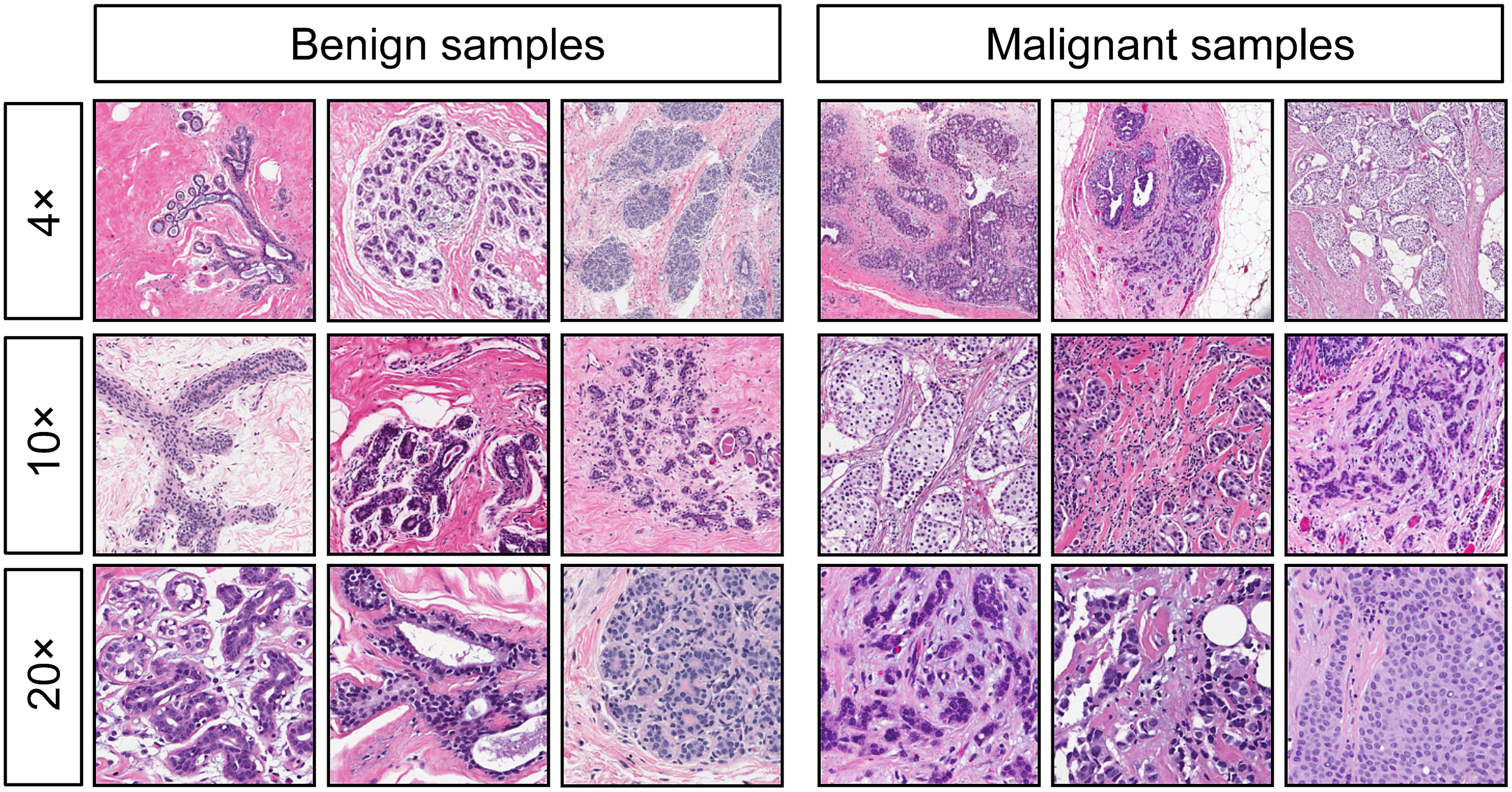

In this tutorial, we will show how to implement various techniques for evaluating classification models using a breast cancer dataset and a simple logistic regression model in Python with Scikit-Learn. Abnormal changes in the breast may be a sign of cancer and need to be investigated. However, changes are not necessarily malignant and, in many cases, are benign. We will work with a breast cancer dataset and train a machine learning classifier to make this distinction (benign/malignant). We will use the model to predict the type of breast cancer based on various characteristics and explore how machine learning can be applied in the life sciences to support medical diagnostics. After training the model, we will use the Confusion Matrix, Error Metrics, and the ROC Curve to measure its performance.

About the Breast Cancer Dataset

The breast cancer dataset contains 569 samples, with 30 features derived from digitized images of tissue samples. The features in the dataset describe the characteristics of the cell nuclei present in the image, including color, size, and symmetry. In addition, the dataset includes a binary target variable that indicates whether the sample is benign or malignant. 212 Samples are malignant, and 357 are benign.

You can find more information on the dataset on the UCI.edu webpage. The breast cancer dataset is included in the scikit-learn package, so there is no need to download the data upfront.

Prerequisites

Before starting the coding part, make sure that you have set up your Python 3 environment and required packages. If you don’t have an environment, follow this tutorial to set up the Anaconda environment. Also, make sure you install all required packages. In this tutorial, we will be working with the following standard packages:

- pandas

- NumPy

- math

- matplotlib

- scikit-learn

You can install packages using console commands:

pip install <package name> conda install <package name> (if you are using the anaconda packet manager)

Step #1 Loading the Data

We begin by loading the cancer dataset from scikit-learn. Then we display a list of the features and plot the balance of our classification target, the two tissue types. “1” is type “benign,” and 0 corresponds to type “malignant.”

# A tutorial for this file is available at www.relataly.com import numpy as np import pandas as pd import seaborn as sns import matplotlib.pyplot as plt from sklearn.model_selection import train_test_split from sklearn.ensemble import RandomForestClassifier from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, confusion_matrix, classification_report, roc_auc_score, plot_roc_curve from sklearn.model_selection import cross_val_predict from sklearn import datasets df = datasets.load_breast_cancer(as_frame=True) df_dia = df.data df_dia['cancer_type'] = df.target plt.figure(figsize=(16,2)) plt.title(f'labels') fig = sns.countplot(y="cancer_type", data=df_dia) df_dia.head()

The barplot shows more benign observations among the sample than malignant ones.

Step #2 Data Preparation and Model Training

Next, we will prepare the data and use it for training a random decision forest classifier. It is important to remember that the performance of a classifier is dependent on the specific data it is trained on. Therefore, it is crucial to evaluate the classifier using a separate, unseen test dataset to avoid overfitting and ensure that the classifier generalizes well to new data. The code below therefore splits the data into train and test datasets.

# Select a small number of features that we use as input to the classification model features = ['carwidth', 'carlength'] df_base = df[features + ['Price_label']] # Separate labels from training data X = df_base[features] #Training data y = df_base['Price_label'] #Prediction label # Split the data into x_train and y_train data sets X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.5, random_state=0)

Now that we have prepared the data, it is time to train our classifier. We use a random forest algorithm from the Scikit-learn package. If you want to learn more about this topic, check out the relataly tutorials on random forests.

# Create the Random Forest Classifier dfrst = RandomForestClassifier(n_estimators=3, max_depth=4, min_samples_split=6, class_weight='balanced') ranfor = dfrst.fit(X_train, y_train) y_pred = ranfor.predict(X_test)

After running the code, you have a trained classifier.

Step #3 Creating a Confusion Matrix

Next, we will create the confusion matrix and several standard error metrics. First, we create the matrix by running the code below. Remember that the matrix will contain only the tabular data without any visualization. To illustrate the results in a heatmap, we first need to plot the matrix. We will use the heatmap function from the seaborn package for this task.

# Create heatmap from the confusion matrix

def createConfMatrix(class_names, matrix):

class_names=[0, 1]

tick_marks = [0.5, 1.5]

fig, ax = plt.subplots(figsize=(7, 6))

sns.heatmap(pd.DataFrame(matrix), annot=True, cmap="Blues", fmt='g')

ax.xaxis.set_label_position("top")

plt.title('Confusion matrix')

plt.ylabel('Actual label'); plt.xlabel('Predicted label')

plt.yticks(tick_marks, class_names); plt.xticks(tick_marks, class_names)

# Create a confusion matrix

cnf_matrix = confusion_matrix(y_test, y_pred)

createConfMatrix(matrix=cnf_matrix, class_names=[0, 1])

Next, we calculate the error metrics (accuracy, precision, recall, f1-score). You can do this by using the separate functions from the Scikit-learn package. Alternatively, you can also use the classification report, which contains all these error metrics.

# Calculate Standard Error Metrics

print('accuracy: {:.2f}'.format(accuracy_score(y_test, y_pred)))

print('precision: {:.2f}'.format(precision_score(y_test, y_pred)))

print('recall: {:.2f}'.format(recall_score(y_test, y_pred)))

print('f1_score: {:.2f}'.format(f1_score(y_test, y_pred)))

# Classification Report (Alternative)

results_log = classification_report(y_test, y_pred, output_dict=True)

results_df_log = pd.DataFrame(results_log).transpose()

print(results_df_log)accuracy: 0.94 precision: 0.97 recall: 0.94 f1_score: 0.95

Step #4 ROC and AUC

Finally, let’s calculate the ROC and the Area under the Curve (AUC).

# Compute ROC curve

fig, ax = plt.subplots(figsize=(10, 6))

RocCurveDisplay.from_estimator(ranfor, X_test, y_test, ax=ax)

plt.title('ROC Curve for the Car Price Classifier')

plt.show()

The ROC tells us, that the model already performs quite well. However, we want to know it precisely. By running the code below, you can calculate the AUC.

# Calculate probability scores y_scores = cross_val_predict(ranfor, X_test, y_test, cv=3, method='predict_proba') # Because of the structure of how the model returns the y_scores, we need to convert them into binary values y_scores_binary = [1 if x[0] < 0.5 else 0 for x in y_scores] # Now, we can calculate the area under the ROC curve auc = roc_auc_score(y_test, y_scores_binary, average="macro") auc # Be aware that due to the random nature of cross validation, the results will change when you run the code

0.9035191562634525

Summary

This tutorial has shown how to evaluate the performance of a two-label classification model. We started by introducing the concept of the confusion matrix and how it can be used to evaluate the performance of a classifier. We then discussed various error metrics, such as accuracy, precision, and recall, and how we can use them to gain a better understanding of the classifier’s performance. Next, we discussed the ROC curve and how it can be used to visualize the trade-offs between precision and recall for different thresholds of the classifier. We also discussed how we could use the ROC curve to compare the performance of different classifiers. In the second part, we have applied the different tools and techniques to the practical example of a breast cancer classifier. We used the confusion matrix and error metrics to evaluate the classifier and the ROC curve to compare its performance.

Overall, this tutorial has provided an overview of the tools and techniques that are commonly used to evaluate the performance of a classification model. By understanding and applying these tools and techniques, we can gain a better understanding of how well a classifier is performing and make informed decisions about whether it is ready for production.

I hope this article helped you understand how to measure the performance of classification models. If you have any questions or feedback, please let me know. And if you are looking for error metrics to measure regression performance, check out this tutorial on regression errors.

Sources and Further Reading

- David Forsyth (2019) Applied Machine Learning Springer

- Andriy Burkov (2020) Machine Learning Engineering

- https://www.kurzweilai.net/pigeons-diagnose-breast-cancer-on-x-rays-as-well-as-radiologists

The links above to Amazon are affiliate links. By buying through these links, you support the Relataly.com blog and help to cover the hosting costs. Using the links does not affect the price.

Hi Florian, great article! Just a small caveat: shouldn’t the terms ‘false positive’ and ‘false negative’ be swapped in the first confusion matrix drawing? Otherwise, very concise and thorough explanations! Thank you!